ClickHouse and Friends (9) MySQL Real-Time Replication and Implementation

Last updated: 2020-09-08

Many people might think they clicked on the wrong link seeing this title, but no.

ClickHouse can be mounted as a MySQL replica, performing full-then-incremental real-time synchronization of MySQL data. This feature can be described as this year’s most eye-catching and most-needed functionality. Based on it, we can easily build enterprise-level solutions, making OLTP and OLAP integration no longer a headache.

Currently supports MySQL 5.6/5.7/8.0 versions, compatible with Delete/Update statements and most common DDL operations.

The code has been merged into the upstream master branch and is expected to be released as an experimental feature in version 20.8.

After all, this is the integration of two heterogeneous ecosystems. There’s still considerable work to be done, and we look forward to community user feedback to accelerate iteration.

Code Acquisition

Get clickhouse/master code and compile. Method described in ClickHouse and Friends (1) Compilation, Development, Testing…

MySQL Master

We need a MySQL with binlog enabled as master:

1 | docker run -d -e MYSQL_ROOT_PASSWORD=123 mysql:5.7 mysqld --datadir=/var/lib/mysql --server-id=1 --log-bin=/var/lib/mysql/mysql-bin.log --gtid-mode=ON --enforce-gtid-consistency |

Create database and table, then insert data:

1 | mysql> create database ckdb; |

ClickHouse Slave

Currently replication is done at the database level. Different databases can come from different MySQL masters, enabling synchronization of data from multiple MySQL sources to one ClickHouse for OLAP analysis.

First enable the experimental feature flag:

1 | clickhouse :) SET allow_experimental_database_materialize_mysql=1; |

Create a replication channel:

1 | clickhouse :) CREATE DATABASE ckdb ENGINE = MaterializeMySQL('172.17.0.2:3306', 'ckdb', 'root', '123'); |

Check ClickHouse’s synchronization position:

cat ckdatas/metadata/ckdb/.metadata

1 | Version: 1 |

Delete

First execute a delete operation on MySQL Master:

1 | mysql> delete from t1 where a=1; |

Then check records on ClickHouse Slave:

1 | clickhouse :) select * from t1; |

At this point, Data Version in metadata has incremented to 2:

1 | cat ckdatas/metadata/ckdb/.metadata |

Update

MySQL Master:

1 | mysql> select * from t1; |

ClickHouse Slave:

1 | clickhouse :) select * from t1; |

Performance Testing

Test Environment

1 | MySQL 8C16G cloud VM, 192.168.0.3, 10188183 records baseline |

Performance testing is heavily related to hardware environment. Cloud VMs are used here, data for reference only.

Full Synchronization Performance

1 | 8c16G-vm :) create database sbtest engine=MaterializeMySQL('192.168.0.3:3306', 'sbtest', 'test', '123'); |

In this hardware environment, full synchronization performance is approximately 424507/s, 420k transactions per second.

Since full data has no dependencies between rows, it can be further optimized for parallel processing to accelerate synchronization.

Full synchronization performance directly determines ClickHouse slave rebuild speed after failure. If your MySQL has 1 billion records, it can be rebuilt in approximately 40 minutes.

Incremental Performance (Real-Time Synchronization)

Under current configuration, ClickHouse slave’s single-threaded replay consumption capability exceeds MySQL master’s production capability under 256 concurrent connections. Testing shows they maintain real-time synchronization.

benchyou stress test data, 21k transactions/second (MySQL TPS doesn’t go higher in current environment):

1 | ./bin/benchyou --mysql-host=192.168.0.3 --mysql-user=test --mysql-password=123 --oltp-tables-count=1 --write-threads=256 --read-threads=0 |

ClickHouse side single-threaded replay capability, 21k transactions/second, real-time sync:

1 | ┌─count()─┬───────────────now()─┬─_version─┐ |

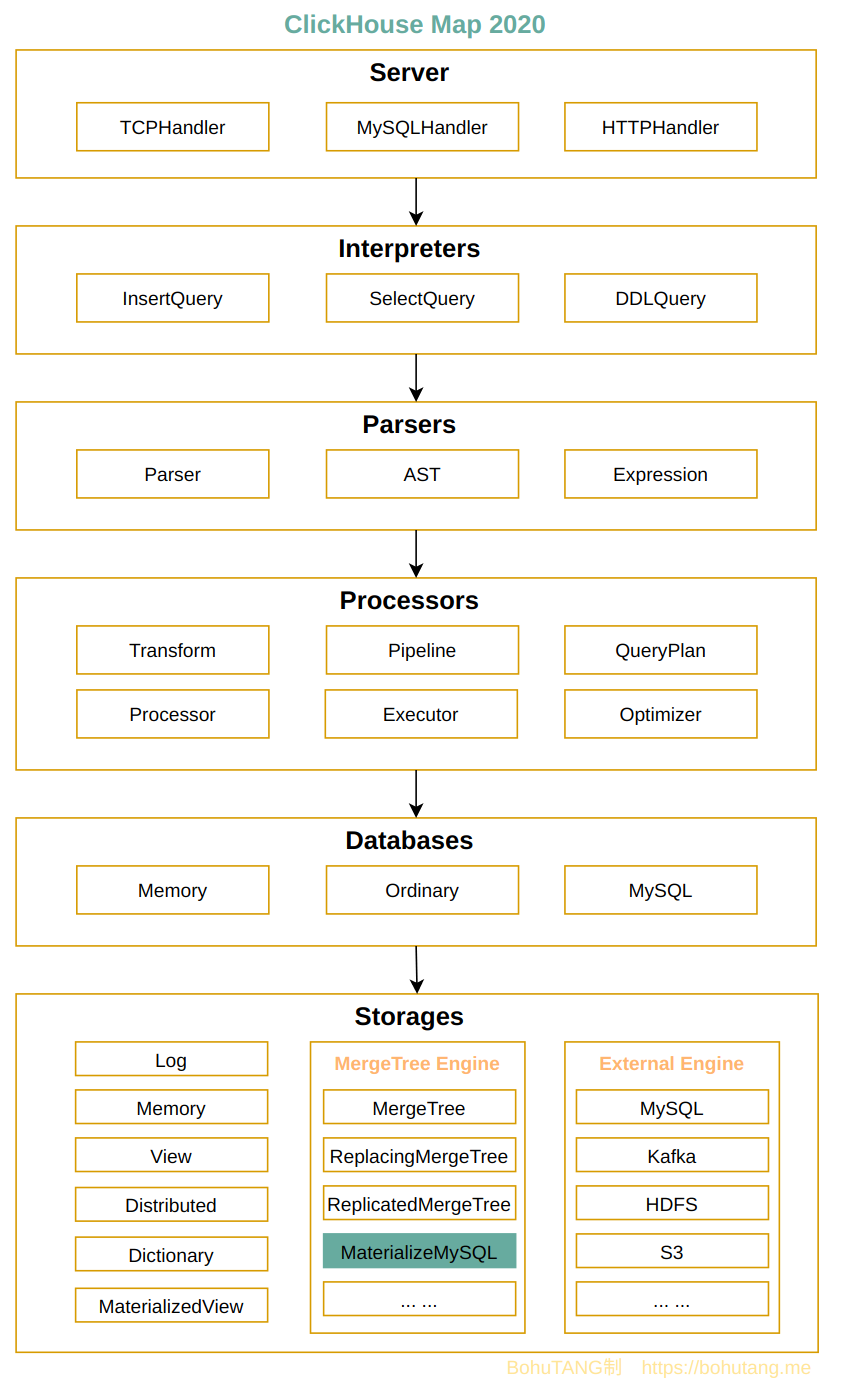

Implementation Mechanism

Before discussing the mechanism, we first need to understand MySQL binlog events. Main types include:

1 | 1. MYSQL_QUERY_EVENT -- DDL |

When a transaction commits, MySQL processes executed SQL into corresponding binlog events and persists them to binlog files.

Binlog is MySQL’s important external output channel. As long as you implement MySQL Replication Protocol, you can stream-consume binlog events produced by MySQL. For specific protocol see Replication Protocol.

Due to historical reasons, the protocol is cumbersome and peculiar, which is not the focus of this article.

For ClickHouse consuming MySQL binlog, there are mainly 3 difficulties:

- DDL compatibility

- Delete/Update support

- Query filtering

DDL

DDL compatibility consumed a large amount of code to implement.

First, let’s see what a MySQL table looks like after replicating to ClickHouse.

MySQL master:

1 | mysql> show create table t1\G; |

ClickHouse slave:

1 | ATTACH TABLE t1 |

You can see:

- Two hidden fields added by default: _sign(-1 delete, 1 insert) and _version(data version)

- Engine converted to ReplacingMergeTree, using _version as column version

- Original primary key field a serves as sorting and partitioning key

This is just one table’s replication. There are many other DDL operations to handle, such as adding columns, indexes, etc. Interested readers can review code under Parsers/MySQL.

Index Conversion

How do MySQL’s corresponding primary keys/indexes map to MaterializeMySQL table structure?

First scan keys in MySQL create table statement, InterpretersMySQLDDLQuery::getKeys:

- Scan unique_key

- Scan primary_key

- Scan auto_increment

Then generate OrderBy tuple expression based on keys in following order, InterpretersMySQLDDLQuery::getOrderByPolicy:

- primary_key[not increment]

- key[not increment]

- unique[not increment]

- unique[increment]

- key[increment]

- primary_key[increment]

ClickHouse currently has only one physical ordering (multiple physical orderings are somewhat planned), determined by OrderBy. So if MaterializeMySQL index is not well utilized, you can use materialized views to create new physical orderings.

Update and Delete

When we execute on MySQL master:

1 | mysql> delete from t1 where a=1; |

ClickHouse t1 data (querying _sign and _version together):

1 | clickhouse :) select a,b,_sign, _version from t1; |

According to returned results, you can see it’s composed of 3 parts.

part1 generated by mysql> insert into t1 values(1,1),(2,2):

1 | ┌─a─┬─b─┬─_sign─┬─_version─┐ |

part2 generated by mysql> delete from t1 where a=1:

1 | ┌─a─┬─b─┬─_sign─┬─_version─┐ |

part3 generated by update t1 set b=b+1:

1 | ┌─a─┬─b─┬─_sign─┬─_version─┐ |

Query with final:

1 | clickhouse :) select a,b,_sign,_version from t1 final; |

You can see ReplacingMergeTree has deduplicated records based on _version and OrderBy.

Query

MySQL master:

1 | mysql> select * from t1; |

ClickHouse slave:

1 | clickhouse :) select * from t1; |

MaterializeMySQL is defined as a storage engine, so during reads it judges based on _sign state. If -1, it’s deleted and gets filtered.

Parallel Replay

Why does MySQL need parallel replay?

Suppose MySQL master has 1024 concurrent writes/updates generating massive binlog events instantly. MySQL slave only has one thread replaying events one after another. So MySQL implemented parallel replay functionality!

So can MySQL slave replay completely (or nearly) simulate master’s 1024 concurrent behavior?

To parallelize, we first need to solve dependency issues: we need master to mark which events can be parallel and which have sequential relationships, since it’s the primary scene.

MySQL adds to binlog:

- last_committed, same means can be parallel

- sequence_number, smaller executes first, describes sequential dependencies

1 | last_committed=3 sequence_number=4 -- event1 |

event2 and event3 can be parallel, event4 needs to wait for previous events to complete before replaying.

The above is just general principle. Currently MySQL has 3 parallel modes to choose from:

- Database-based parallelism

- Group commit-based parallelism

- Primary key conflict-free write set parallelism

Maximally accelerating MySQL slave replay, the entire mechanism is exceptionally complex.

Back to ClickHouse slave problem, we use single-threaded replay, lag is no longer the main issue, determined by their mechanisms:

MySQL slave replay needs to convert binlog events to SQL, then simulate master writes. This logical replication is the most important reason for low performance.

ClickHouse replay directly converts binlog events to underlying block structure, then directly writes to underlying storage engine, close to physical replication. Can be understood as replaying binlog events directly to InnoDB pages.

Read Latest

Although ClickHouse slave replay is very fast, near real-time, how to always read the latest data on ClickHouse slave?

Actually very simple. Leveraging MySQL binlog GTID feature, each read time we do an executed_gtid sync with master, then wait for these executed_gtid to finish replaying.

Data Consistency

For scenarios with high consistency requirements, how do we verify data consistency between MySQL master and ClickHouse slave?

Initial idea is to provide a function compatible with MySQL checksum algorithm. We just need to compare checksum values on both sides.

Summary

ClickHouse real-time replication syncing MySQL data is a 2020 upstream roadmap item. The overall architecture is quite challenging and no one took the ticket. Challenges mainly come from two aspects:

- Very familiar with MySQL replication channels and protocols

- Very familiar with overall ClickHouse mechanisms

Thus, a highway was built between two originally somewhat distant mountains. This 10851 highway was jointly constructed by two road workers: zhang1024 (ClickHouse side) and BohuTANG (MySQL replication), now merged into upstream branch.

Regarding syncing MySQL data, current solutions basically place a binlog consumption tool in the middle. This tool parses events, then converts to ClickHouse SQL statements, writing to ClickHouse server. Long chain, significant performance loss.

10851 highway implements a binlog consumption solution inside ClickHouse, then parses events into ClickHouse internal block structure, directly writing back to underlying storage engine. Almost the most efficient implementation method, achieving real-time MySQL synchronization capability, making analysis closer to reality.

Based on database-level replication, implementing multi-source replication functionality. If replication channel breaks, we just need to delete the database on ClickHouse side and recreate it. Very fast, convenient. OLTP+OLAP is that simple!

To get rich, first build roads!

【Top】ClickHouse MaterializeMySQL Real-Time MySQL Sync Summary